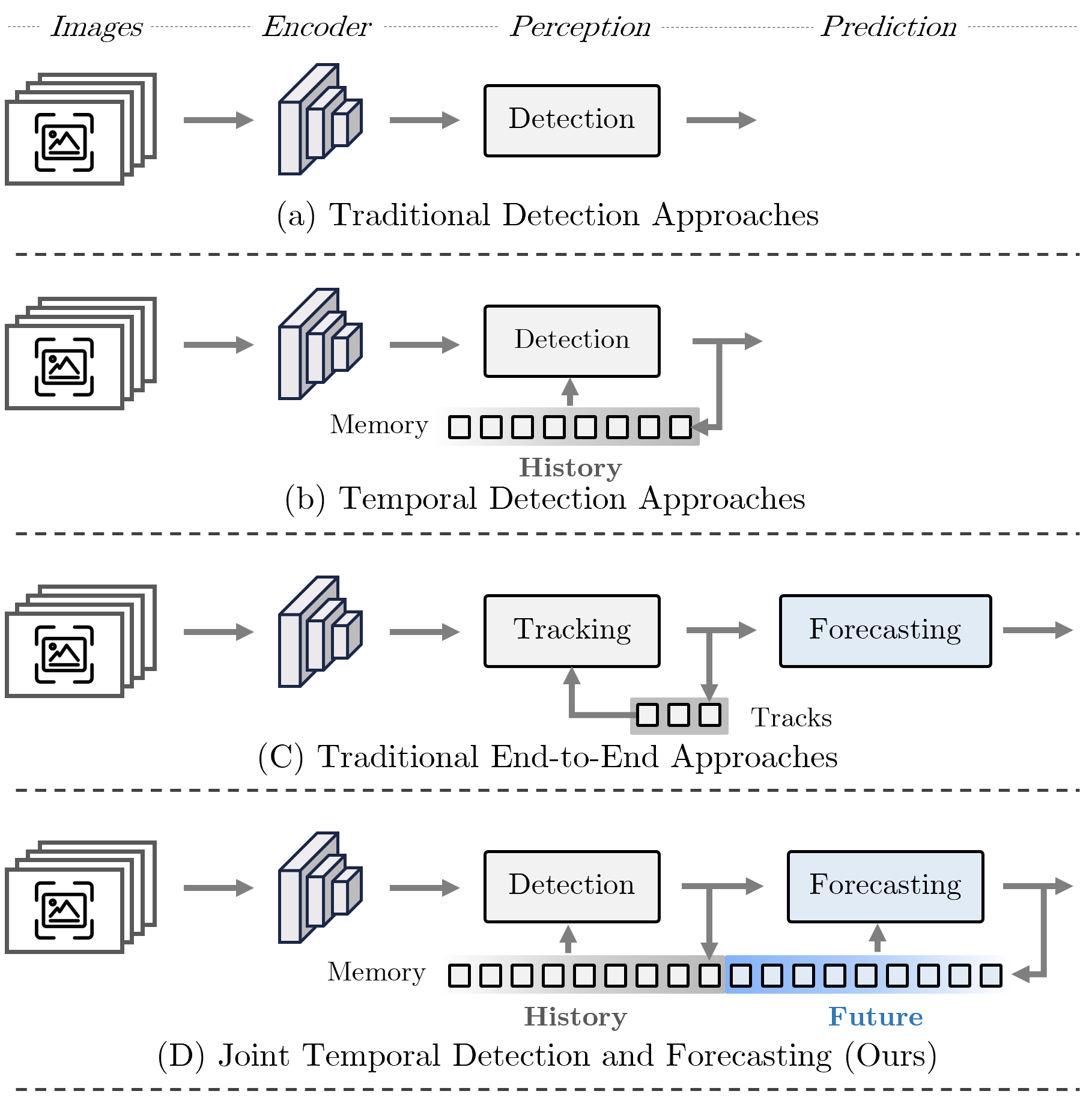

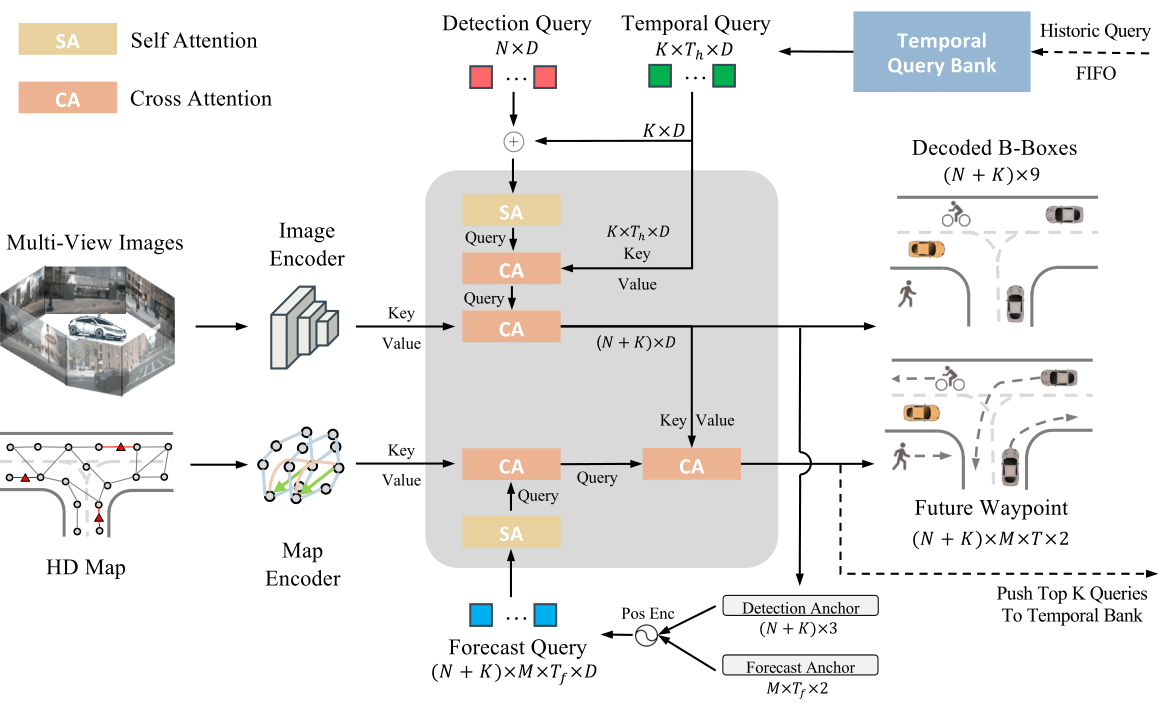

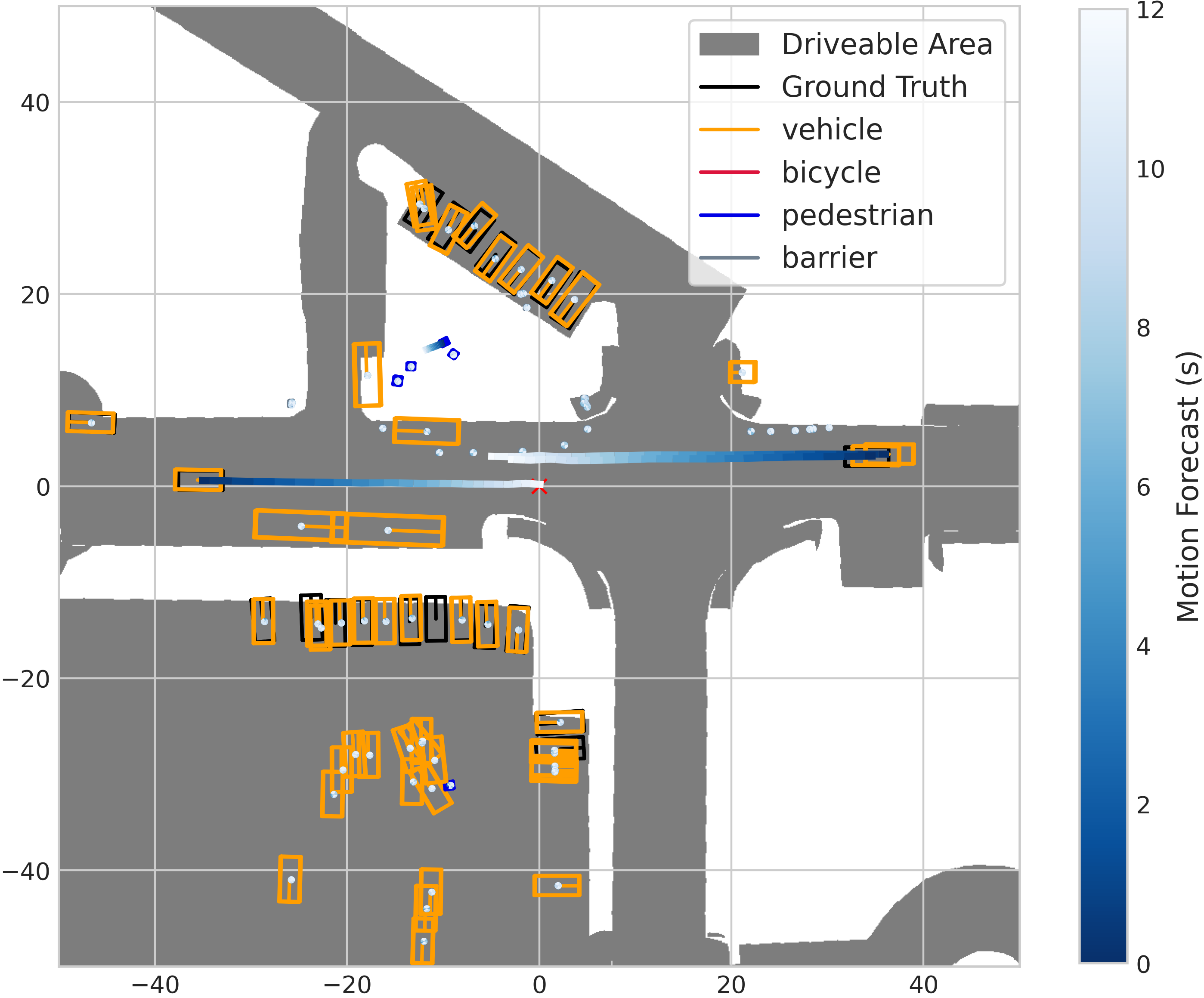

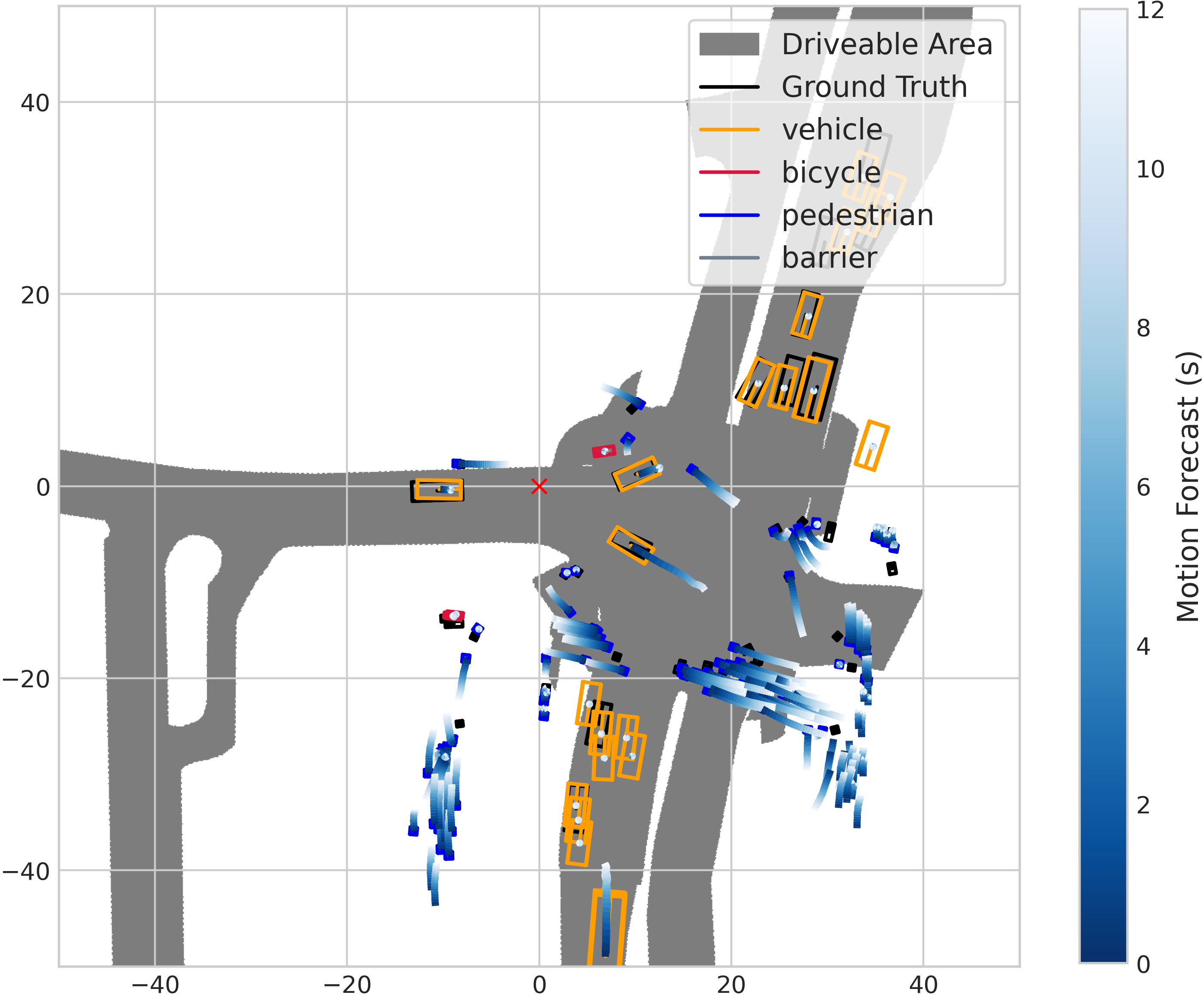

ForeSight unifies 3D object detection and motion forecasting in a single streaming framework. A shared, bidirectional memory lets forecasts inform detections and vice versa, improving accuracy and temporal consistency in complex scenes.

Its transformer-based design removes the need for an explicit tracking stage, which reduces the propagation of errors from one stage to the next. ForeSight streams queries across frames, using past detections and forecasts as priors for the current frame's predictions.
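To make this data flow concrete, here is a toy Python sketch of a streaming query memory in which forecasts and detections feed each other. It is not ForeSight's implementation. The `Query` and `ToyStreamingMemory` names are hypothetical. Transformer cross-attention is replaced by a softmax over negative squared distances to observed object centers, and the forecasting head is replaced by a constant-velocity rollout. The sketch does show the coupling described above. Each query persists across frames with no track-ID association step. Its first forecast step serves as the prior (reference point) for the next frame's detection. The refined detection then updates the motion estimate behind the next forecast.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Query:
    """One persistent object query (hypothetical structure, not ForeSight's)."""
    pos: Point                      # latest center estimate (starts at an anchor)
    initialized: bool = False       # True once the query has processed a frame
    forecast: List[Point] = field(default_factory=list)  # future centers, t = 1..horizon


class ToyStreamingMemory:
    """Toy streaming memory shared by detection and forecasting.

    Stand-ins (assumptions): distance-based soft attention instead of learned
    cross-attention, and constant-velocity rollout instead of a forecasting head.
    """

    def __init__(self, anchors: List[Point], horizon: int = 3, temperature: float = 1.0):
        self.queries = [Query(pos=a) for a in anchors]
        self.horizon = horizon
        self.temperature = temperature

    def _attend(self, ref: Point, observations: List[Point]) -> Point:
        # Soft attention: weight each observation by softmax(-dist^2 / T) around the prior.
        logits = [-((ox - ref[0]) ** 2 + (oy - ref[1]) ** 2) / self.temperature
                  for ox, oy in observations]
        m = max(logits)  # subtract the max for numerical stability
        weights = [math.exp(z - m) for z in logits]
        total = sum(weights)
        x = sum(w * o[0] for w, o in zip(weights, observations)) / total
        y = sum(w * o[1] for w, o in zip(weights, observations)) / total
        return (x, y)

    def step(self, observations: List[Point]) -> List[Query]:
        """Process one frame of observed object centers and return the updated queries."""
        for q in self.queries:
            # Forecast -> detection: the first forecast step is this frame's prior.
            ref = q.forecast[0] if q.forecast else q.pos
            new_pos = self._attend(ref, observations) if observations else ref
            # Detection -> forecast: the refined detection updates the motion estimate.
            if q.initialized:
                vx, vy = new_pos[0] - q.pos[0], new_pos[1] - q.pos[1]
            else:
                vx, vy = 0.0, 0.0
            q.pos, q.initialized = new_pos, True
            # Constant-velocity rollout standing in for a learned forecasting head.
            q.forecast = [(new_pos[0] + vx * t, new_pos[1] + vy * t)
                          for t in range(1, self.horizon + 1)]
        return self.queries
```

For example, with two anchors near two objects, one moving along x and one along y, three calls to `step` leave each query on its object, with a forecast that extrapolates that object's motion. Identity comes only from a query persisting in memory, so the sketch needs no separate detection-to-track matching. In the actual model, learned attention would play the role of the distance kernel used here.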

On nuScenes, ForeSight achieves a new state of the art in end-to-end prediction accuracy (EPA), outperforming UniAD by +9.3%, and improves detection with a +2.1% mAP gain over StreamPETR, while remaining efficient enough for streaming inference.
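EPA scores detection and forecasting jointly, which is why it suits a unified model like this one. The sketch below assumes that EPA follows the formulation introduced with ViP3D: EPA = (N_hit - α·N_FP) / N_GT. A hit is a predicted agent that is matched to a ground-truth agent and whose forecast falls within a distance threshold. N_FP counts unmatched (false-positive) predictions, and α = 0.5. The function name and default are illustrative. The matching and hit thresholds are not reproduced here.

```python
def epa(num_hits: int, num_false_positives: int, num_ground_truth: int,
        alpha: float = 0.5) -> float:
    """End-to-end prediction accuracy under an assumed ViP3D-style formulation.

    Missed or badly forecast agents reduce num_hits; spurious detections are
    penalized directly through alpha * num_false_positives.
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    return (num_hits - alpha * num_false_positives) / num_ground_truth


# 60 correctly detected and forecast agents, 20 false positives, 100 ground-truth agents:
score = epa(60, 20, 100)  # (60 - 0.5 * 20) / 100
```

Because false positives enter the numerator, a detector cannot raise EPA simply by emitting more boxes. This couples the metric to detection quality as well as forecast accuracy.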